Scientifica’s picks of the best neuroscience research from July 2019

Included in Scientifica’s selection of the best neuroscience stories from July are the first multi-person brain-to-brain interface for solving tasks, new insight into how mirror therapy treats phantom limb pain, and a computer system that can predict how a person will feel when they see an image.

1. Microrobots activated by laser pulses show promise for treating tumours

Microrobots that can deliver drugs to specific sites in the body, while being monitored and controlled from outside the body, have been developed by scientists at Caltech.

The microrobots have been developed to treat tumours in the digestive tract. They are formed from microscopic spheres of magnesium coated with thin layers of gold and parylene, a polymer that cannot be digested. A circular portion of each magnesium sphere is left uncovered; this exposed patch reacts with the fluids in the digestive tract to produce small bubbles, which propel the sphere forward until it collides with tissue. A layer of medication is placed between the microsphere and its parylene coat, and the microrobot is then encased in a microcapsule of paraffin wax to protect it from the acidic environment of the stomach.

Photoacoustic computed tomography (PACT) is then used to guide the microrobots to the target location. This technique uses pulses of infrared laser light, which pass through tissue and are absorbed by haemoglobin in red blood cells, causing the cells to vibrate at ultrasonic frequencies. These vibrations are picked up by sensors on the skin, allowing tumours to be detected and the location of the microrobots to be tracked.

Once the microrobots reach the tumour, they are activated by a high-power continuous-wave near-infrared laser beam. As they absorb the infrared light, they briefly heat up, melting the wax capsules encasing them and exposing them to digestive fluids. This activates the microrobots' bubble jets, causing them to swarm. The jets are not steerable, so not all of the microrobots hit the targeted area, but many do. Those that reach it stick to the surface and begin releasing their medication.

2. Researchers discover the science behind giving up

A study by researchers at the University of Washington has identified the role of nociceptin neurons in giving up. The team found that these neurons become more active before a mouse reaches its breakpoint, the point at which it stops working for a reward.

In the experiment, a mouse had to poke its snout into a port to receive a sucrose reward, and the sucrose became harder to obtain as the experiment went on. The researchers found that the nociceptin neurons became more active as the mouse worked harder without receiving the reward.

Nociceptin neurons are located near the ventral tegmental area of the brain, which contains neurons that release dopamine during pleasurable activities. The researchers found that these neurons release nociceptin, which suppresses dopamine release and signals the mouse to give up.

This research could help scientists to develop better treatments for disorders that are caused by dopamine dysregulation, including depression and addiction, by modifying the levels of dopamine released by the brain.

3. Scientists have created the first multi-person brain-to-brain interface for solving tasks

Researchers at the University of Washington have created a game that players complete together using only their minds.

BrainNet, the interface behind the Tetris-like game, is the first non-invasive, direct brain-to-brain interface that multiple people can use for problem-solving. To communicate with each other, players wear electroencephalography (EEG) caps that pick up the wearer’s brain signals; this information is decoded and passed to another player via the internet. To deliver the signal to the receiving player, transcranial magnetic stimulation (TMS) is applied to the part of the brain that processes signals from the eyes. This tricks the brain so that the player sees bright arcs or objects appearing before their eyes.

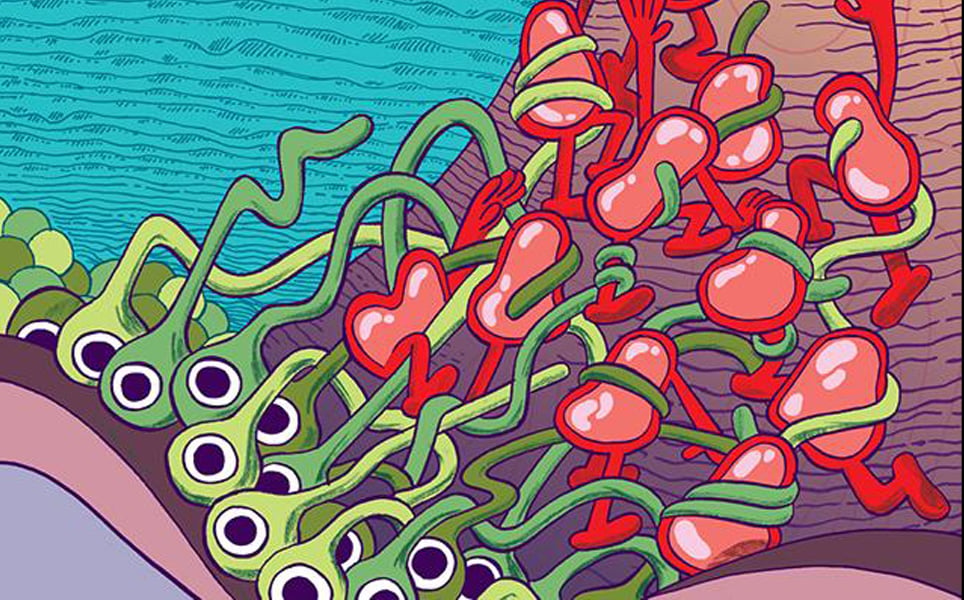

4. Columbia researchers controlled a mouse’s behaviour with single-cell precision

Columbia University researchers have, for the first time, controlled the visual behaviour of a mouse by activating neurons in its visual cortex with single-cell precision.

The research provided evidence that groups of neurons, called neuronal ensembles, play a role in behaviour. Using two-photon calcium imaging, the team identified cortical ensembles in mice while they performed a visual task. They then used high-resolution optogenetics to precisely stimulate target neurons. Activating neurons related to the visual task improved the animals’ performance, whereas activating neurons unrelated to the task impaired it.

Being able to identify and stimulate neuronal ensembles with single-cell precision could help in treating conditions caused by abnormal activity patterns, such as Alzheimer’s disease and schizophrenia.

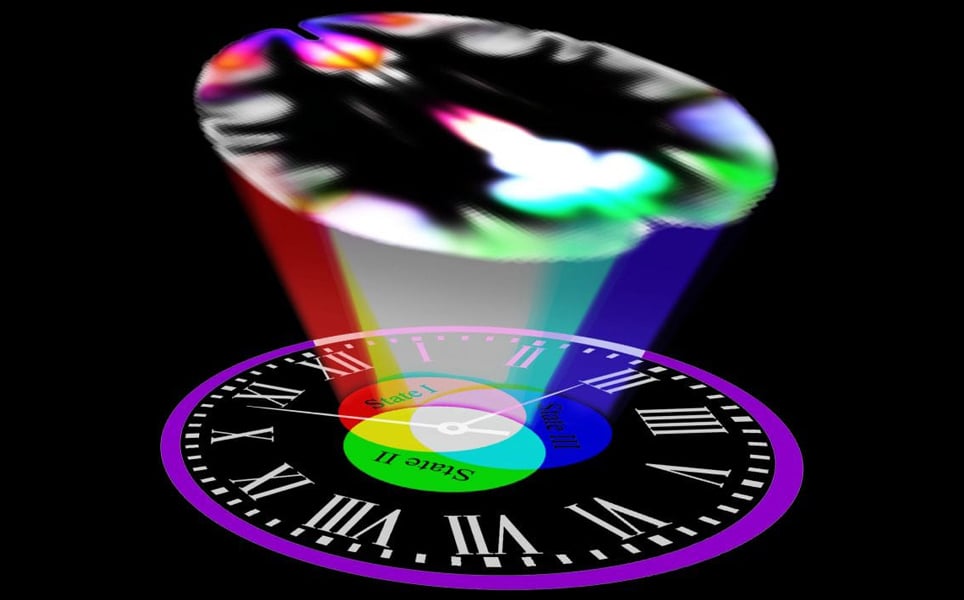

5. Structure of brain networks is not fixed

Using functional magnetic resonance imaging (fMRI) data, researchers at Georgia State University have discovered that the shape and functional connectivity of brain networks can change over time.

Brain networks result from neurons in discrete areas of the brain communicating to enable the performance of cognitive tasks. These networks have previously been thought to be spatially static, with a fixed set of brain regions contributing to each network. However, the researchers saw in fMRI brain images taken over several minutes that the size, location and function of the networks change rapidly.

The researchers plan to develop this work further and hope that it will give insight into the changes in brain networks that occur in patients with schizophrenia and other brain disorders.

6. UCI researchers’ deep learning algorithm solves Rubik’s Cube faster than any human

Scientists at the University of California, Irvine, have developed DeepCubeA, a deep reinforcement learning algorithm that can solve a Rubik’s Cube in a fraction of a second.

The algorithm requires no specific knowledge or coaching from humans. It solved every test configuration, finding the shortest path to the goal state 60 percent of the time. The researchers aim to use projects like this to help build the next generation of artificial intelligence systems.

7. Hit it where it hurts – how mirrors cure phantom pain

Phantom limb pain, in which patients feel intense pain in a missing limb, is very difficult to treat with drugs. Mirror therapy has long been used to treat the condition: patients perform symmetrical exercises while watching the reflection of their intact limb in a mirror, so that the missing limb appears to move as though it were really there.

Scientists at Brunel University have mapped how mirror therapy changes the brain, which may soon enable them to predict how well it will work in different patients.

8. A computer system that knows how you feel

Scientists have repurposed AlexNet, an existing neural network (a computer system modelled on the brain) that enables computers to recognise objects, so that it can predict how a person would feel when they see a certain image.

The researchers at the University of Colorado Boulder showed this modified network, named EmoNet, 25,000 images and asked it to sort them into 20 emotion categories, from horror to craving. EmoNet accurately and consistently categorised 11 types of emotion, identifying photos that evoke craving or sexual desire with more than 95% accuracy, but it struggled with emotions such as confusion, awe and surprise.

The team then used fMRI to measure the brain activity of 18 human subjects as they viewed 112 images, each flashed for 4 seconds; the same images were shown to EmoNet. The patterns of activity in the subjects’ occipital lobes matched those of the units in EmoNet that code for specific emotions, indicating that EmoNet had learned to encode emotions in a biologically plausible way.

9. Genes underscore five psychiatric disorders

In a collaboration between the University of Queensland, Australia, and Vrije Universiteit Amsterdam, researchers have identified genes that contribute to the development of ADHD, autism spectrum disorder, bipolar disorder, major depression and schizophrenia.

Having observed that multiple family members often have a mental illness, but not necessarily the same one, the team wanted to investigate whether specific sets of genes are involved in the development of multiple disorders. To identify the genes that contribute to these psychiatric disorders, the researchers analysed data from more than 400,000 individuals.

They found a common set of genes, highly expressed in the brain, that increases the risk of developing all five disorders, reflecting biological mechanisms shared by the disorders. This finding could lead to the development of new drugs that target these shared biological pathways.

10. Team IDs spoken words and phrases in real-time from brain’s speech signals

Scientists at UC San Francisco are a step closer to treating people with speech loss after showing that brain activity recorded as research participants spoke could be used to create realistic synthetic versions of that speech.

In previous studies, it took the researchers months to translate brain activity into speech. In a recent study, however, the scientists decoded spoken words and phrases in real time from the brain signals that control speech.

It is hoped that this technique could help patients who are unable to speak as a result of facial paralysis due to brainstem stroke, spinal cord injury or neurodegenerative disease. In these patients, the brain regions that control the muscles of the jaw, lips, tongue and larynx are often intact and active, so their speech signals could be decoded to reveal what they are trying to say.

Banner image credit: Columbia University
